Predicting Large Scale Power Grid Load

I did this work for PJM while working at PointServe. PJM is “a regional transmission organization (RTO) that coordinates the movement of wholesale electricity in all or parts of 13 states and the District of Columbia”.

PJM contracted with us to help them predict the near-term overall load on their systems, i.e. how much electricity people in the states they served were going to use. The goal was to cut wasted time and resources: they would find themselves producing either too much or too little power for their customers' needs. Because altering production takes both time and resources, anticipating load made it possible for them to save millions of dollars by informing the strategic raising and lowering of electricity production to better match demand.

I tried a number of techniques to solve the problem, including recurrent backpropagation networks, that took into account historical and current load data alongside historical and current weather data.
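As a rough illustration of how load and weather history can be framed as a supervised learning problem, here is a minimal sketch. The function name `make_windows`, the use of temperature as the only weather signal, the three-hour lookback, and all of the numbers are assumptions made for illustration; they are not the features or data from the PJM project.

```python
from typing import List, Tuple


def make_windows(
    load: List[float],
    temperature: List[float],
    lookback: int = 3,
    horizon: int = 4,
) -> List[Tuple[List[float], float]]:
    """Pair recent load and weather readings with the load `horizon` hours later."""
    if len(load) != len(temperature):
        raise ValueError("load and temperature must be the same length")
    samples = []
    # t is the index of the most recent observation the model is allowed to see
    for t in range(lookback - 1, len(load) - horizon):
        recent_load = load[t - lookback + 1 : t + 1]
        recent_temp = temperature[t - lookback + 1 : t + 1]
        features = recent_load + recent_temp  # history of both signals, oldest first
        target = load[t + horizon]            # the value to be predicted
        samples.append((features, target))
    return samples


# Made-up hourly values, for illustration only
hourly_load = [90.0, 92.0, 95.0, 99.0, 104.0, 108.0, 110.0, 109.0]
hourly_temp = [20.0, 21.0, 23.0, 25.0, 27.0, 28.0, 28.0, 27.0]
samples = make_windows(hourly_load, hourly_temp)
```

Each `(features, target)` pair could then be fed to whatever model is being trained; a recurrent network would typically consume the readings one time step at a time rather than as one flat vector.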

The research was successful: I was able to predict load four hours into the future to within 2.5%.
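One common way to express a figure like this is mean absolute percentage error (MAPE). The sketch below assumes that metric purely for illustration; the error measure actually used in the project is not stated here, and the sample values are made up.

```python
from typing import Sequence


def mean_absolute_percentage_error(
    actual: Sequence[float], predicted: Sequence[float]
) -> float:
    """Average of |actual - predicted| / |actual|, expressed as a percentage."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and the same length")
    total = 0.0
    for a, p in zip(actual, predicted):
        if a == 0:
            raise ValueError("percentage error is undefined when actual is 0")
        total += abs(a - p) / abs(a)
    return 100.0 * total / len(actual)


# Made-up load values and forecasts, for illustration only
actual_load = [100.0, 200.0, 400.0]
forecast = [98.0, 205.0, 400.0]
mape = mean_absolute_percentage_error(actual_load, forecast)
```

For these made-up numbers the individual errors are 2%, 2.5% and 0%, so the average comes out to 1.5%.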

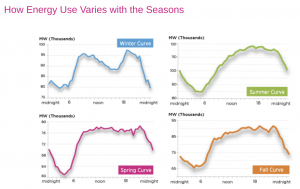

The findings were proprietary, so the image from the PJM website shown here is only indicative of the sort of data we were working with.

“SARDNET: A Self-Organizing Feature Map for Sequences”

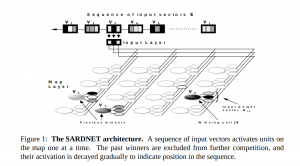

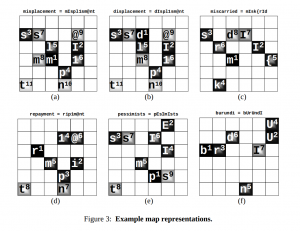

This neural network architecture is a variation on the Kohonen self-organizing map that incorporates a kind of short-term memory that “fades” over time, meant to be analogous to biological memory. The network was successfully applied to speech pattern (word) learning and recognition.

Daniel L. James and Risto Miikkulainen, Department of Computer Sciences, The University of Texas at Austin, Austin, TX 78712

Abstract: A self-organizing neural network for sequence classification called SARDNET is described and analyzed experimentally. SARDNET extends the Kohonen Feature Map architecture with activation retention and decay in order to create unique distributed response patterns for different sequences. SARDNET yields extremely dense yet descriptive representations of sequential input in very few training iterations. The network has proven successful on mapping arbitrary sequences of binary and real numbers, as well as phonemic representations of English words. Potential applications include isolated spoken word recognition and cognitive science models of sequence processing.
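To make "activation retention and decay" concrete, here is a minimal sketch of how a response pattern for one sequence might be computed on an already-trained map. It is an illustration under stated assumptions, not the paper's implementation: the function name `sardnet_response`, the decay value of 0.9, the tiny one-dimensional weights, and the rule that a unit which has already won is excluded from later competition are all assumptions of this sketch, and training (the Kohonen weight updates) is left out entirely.

```python
from typing import List, Sequence


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def sardnet_response(
    weights: List[List[float]],
    sequence: List[List[float]],
    decay: float = 0.9,
) -> List[float]:
    """Return one activation value per map unit after presenting the sequence."""
    if len(sequence) > len(weights):
        raise ValueError("sequence is longer than the number of map units")
    activation = [0.0] * len(weights)
    already_won = set()
    for item in sequence:
        # Retention and decay: earlier winners stay active but fade a little
        activation = [a * decay for a in activation]
        # Assumed rule: only units that have not yet won may compete
        candidates = [i for i in range(len(weights)) if i not in already_won]
        winner = min(candidates, key=lambda i: squared_distance(weights[i], item))
        activation[winner] = 1.0
        already_won.add(winner)
    return activation


# Hypothetical "trained" map with three units and one-dimensional inputs
weights = [[0.0], [0.5], [1.0]]
forward = sardnet_response(weights, [[0.1], [0.9]])
backward = sardnet_response(weights, [[0.9], [0.1]])
repeated = sardnet_response(weights, [[0.1], [0.1]])
```

In this toy setup the same two inputs presented in opposite orders leave different patterns on the map, because the earlier item has faded by the time the later one arrives. The repeated input ends up on two different units, since the first winner is no longer allowed to compete.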